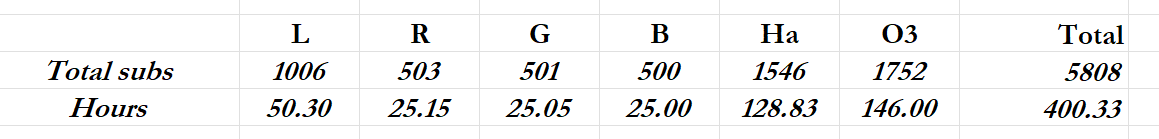

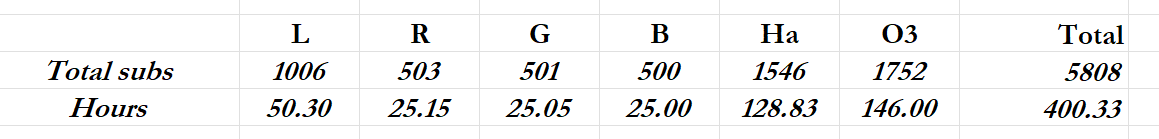

I recently finished capturing a single frame with more than 400 hours of acquisition time--my longest ever, obtained only after suffering pain equivalent to delivering a breech birth. I started the project in 2024, when I got 50 hours of data, and completed it this year by slogging through another 350 hours starting in January: 5,808 subs across all filters. Here are the basic stats:

When I stacked all of the data, the results were less than pleasing. For example, using the Image Analysis-->SNR script in PI, the Ha master came up with an SNR of 53.38 dB. Last year's Ha stack, about 10 hours of Ha, came up with an SNR of 61.2. The masters for all the other filters gave very similar results. The 10 hours of Ha from last year not only had a higher SNR, it also just looked better and processed better than the 128 hours of data I bled for.
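
For a sense of scale: if the stack is limited by random, uncorrelated noise, SNR should grow roughly with the square root of integration time. Here's that back-of-envelope check for 128 vs. 10 hours of Ha. It's a sketch under that assumption only, and it gives a linear ratio, since I'm not assuming how the PI script converts its result to dB.

```python
import math

def expected_snr_gain(hours_new: float, hours_old: float) -> float:
    """Expected linear SNR ratio, assuming SNR scales with sqrt(integration time)."""
    return math.sqrt(hours_new / hours_old)

gain = expected_snr_gain(128, 10)
print(f"Expected SNR gain: {gain:.2f}x")  # prints "Expected SNR gain: 3.58x"
```

In other words, under that assumption the 128-hour master should have come out well ahead of the 10-hour one, not behind it.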

Okay, maybe there was some bad data in those 1,546 Ha subs that I somehow missed with Blink and SubframeSelector. That's simply not the case, and here's how I know: I split the data into eight chunks of 150 to 200 subs each and stacked each chunk separately. Every chunk stack had an SNR of 75+, and all of them looked way better than the full stack of 1,546 subs.

(Please note that I ran both the full stack and the split stacks in both APP and WBPP. The results were very consistent.)

After I stacked the 8 chunks of Ha data into 8 masters, I stacked those 8 masters using PI's ImageIntegration process. The result was effing spectacular: an SNR of 89.66, and it looks like a million dollars, exactly what I was hoping for. I started processing it and was astounded at how much better it was than the full stack of 1,546 subs.

(Again, these results were basically the same in both APP and WBPP.)

So my question is: WTF?

Or better still: W. T. Holy. F?

Note: I'm sorry I can't share screenshots or raw files here. The image isn't finished, and I don't want to disclose anything about what it is yet, so you'll have to trust me on these numbers and descriptions. Any insights would be helpful, though.

Without knowing anything at all about the stacking algorithms or the math involved, here are my theories--none of which I'm very confident in.

1. Mabula and the PI team both somehow failed to account for extreme integration times, and their algorithms simply aren't robust enough to handle them

2. Weighting is somehow playing a role, perhaps favoring lower-quality data over higher-quality data when there's enough of the lower-quality data

3. Rejection algorithms are playing an unexpected role with a very large sample size
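
A toy sketch of why theory 2 is at least plausible: for a plain unweighted average with no rejection, averaging eight equal-size chunk masters gives the same pixel value as averaging all the subs in one pass, so chunking by itself shouldn't change anything. Once per-sub weights are involved, the second stage re-weights the masters, and the two routes diverge. Everything here is an assumption for illustration: synthetic values for a single pixel, a hypothetical brighter second half of the data given a lower weight of 0.25, and equal weights for the masters in stage two. Real ImageIntegration or APP runs compute their own weights, and rejection isn't modeled at all.

```python
import random

def weighted_mean(values, weights):
    """Weighted average of values; with equal weights this is a plain mean."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

def split_equal(items, n_chunks):
    """Split items into n_chunks consecutive slices of equal length (assumes an even split)."""
    size = len(items) // n_chunks
    return [items[i * size:(i + 1) * size] for i in range(n_chunks)]

random.seed(42)
N, CHUNKS = 1544, 8  # 1544 rather than 1546 so the 8 chunks are exactly equal (193 each)

# Synthetic values for one pixel across all subs. Hypothetical: the second half
# sits on a brighter background and is given a lower weight of 0.25.
values = ([random.gauss(100.0, 5.0) for _ in range(N // 2)]
          + [random.gauss(104.0, 5.0) for _ in range(N // 2)])
weights = [1.0] * (N // 2) + [0.25] * (N // 2)

# Case 1: no weighting, no rejection. One pass and two stages agree
# (up to floating-point rounding) because every chunk has the same size.
one_pass = weighted_mean(values, [1.0] * N)
masters = [weighted_mean(c, [1.0] * len(c)) for c in split_equal(values, CHUNKS)]
two_stage = weighted_mean(masters, [1.0] * CHUNKS)

# Case 2: per-sub weights. Stage two gives every master the same weight,
# so the down-weighted half regains influence and the results diverge.
one_pass_w = weighted_mean(values, weights)
masters_w = [weighted_mean(v, w)
             for v, w in zip(split_equal(values, CHUNKS), split_equal(weights, CHUNKS))]
two_stage_w = weighted_mean(masters_w, [1.0] * CHUNKS)

print(f"unweighted: one pass {one_pass:.4f}, two stages {two_stage:.4f}")
print(f"weighted:   one pass {one_pass_w:.4f}, two stages {two_stage_w:.4f}")
```

Which route is "better" depends on whether the per-sub weights were right in the first place; the sketch only shows that the two routes aren't interchangeable once weighting enters the picture.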

All I know is that splitting this project into ~200-sub stacks and then stacking the results of those chunks produces vastly--vastly--superior results. I haven't been this discombobulated since November 5th. Please explain this to me if you can. If no one knows, I'm just going to change my preprocessing workflow to stack in chunks of no more than 250 subs, which will be a giant PITA.
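
If it comes to that, the bookkeeping at least is easy to script. A minimal sketch in plain Python with hypothetical filenames; it only plans the groups and doesn't drive APP or WBPP. It splits a sub list into the fewest chunks of at most 250 subs, keeping the chunk sizes as even as possible.

```python
import math

def plan_chunks(files, max_per_chunk=250):
    """Split a list of sub filenames into the fewest chunks holding at most
    max_per_chunk subs each, with chunk sizes differing by at most one."""
    if not files:
        return []
    n_chunks = math.ceil(len(files) / max_per_chunk)
    base, extra = divmod(len(files), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first chunks
        chunks.append(files[start:start + size])
        start += size
    return chunks

# Hypothetical filenames standing in for the 1,546 Ha subs.
subs = [f"Ha_{i:04d}.xisf" for i in range(1546)]
print([len(c) for c in plan_chunks(subs)])  # [221, 221, 221, 221, 221, 221, 220]
```

With `max_per_chunk=200`, the same list splits into eight chunks of 193 or 194 subs.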
