Mark Fox:

So my color camera can be a mono camera? That's the most unusual-sounding thing I've ever heard in astrophotography.

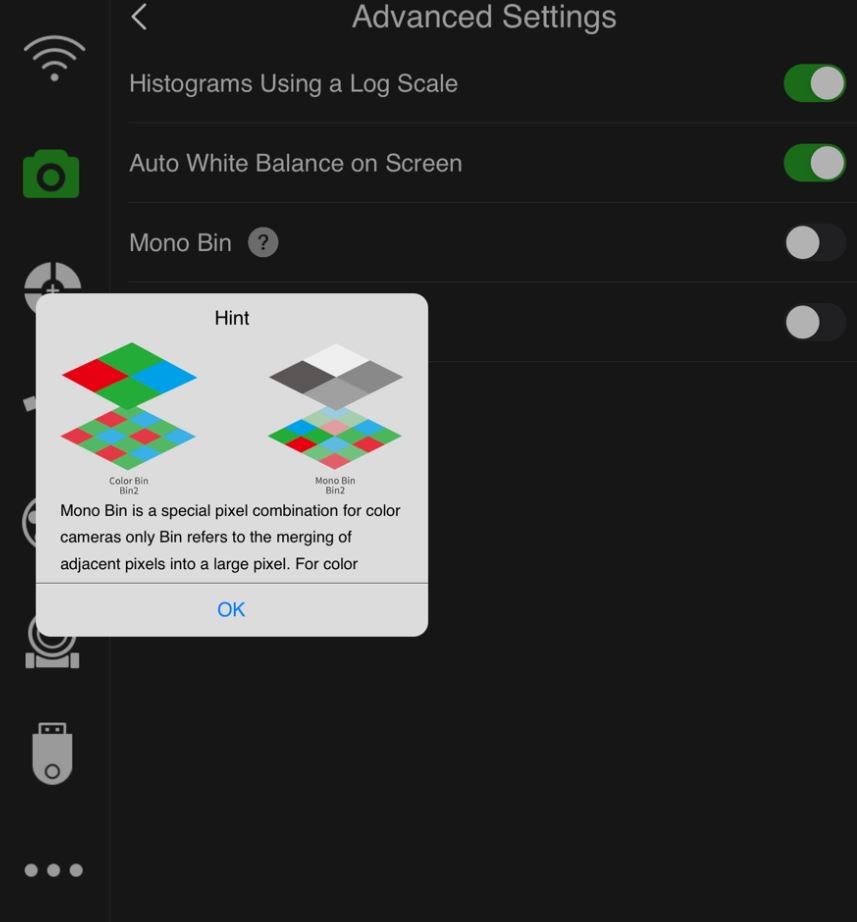

Yes, I can't think of anything more absurd than this setting.

Hey everyone,

I wanted to share a thought on a feature that I think is seriously underrated and could be a real game-changer for many of us.

I'm talking about quadrupling the Full Well Capacity on sensors like the IMX571, which goes from 51k to 204k electrons.
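A quick way to see what the extra capacity buys: dynamic range is usually quoted as full well divided by read noise, expressed in stops. This sketch assumes the 204k figure comes from digitally summing a 2×2 block of 51k pixels, so the read noise of the four pixels adds in quadrature (it doubles). It also uses a hypothetical 1.0 e- read noise, not a measured value for any specific camera.

```python
import math


def dynamic_range_stops(full_well_e, read_noise_e):
    """Dynamic range in photographic stops: log2(full well / read noise)."""
    return math.log2(full_well_e / read_noise_e)


def binned_sum(full_well_e, read_noise_e, n=2):
    """Full well and read noise of an n x n digital sum.

    Assumption: each pixel is read out separately, with independent read noise.
    """
    pixels = n * n
    return full_well_e * pixels, read_noise_e * math.sqrt(pixels)


NATIVE_FW = 51_000  # electrons, figure quoted in the post
READ_NOISE = 1.0    # electrons, hypothetical value for illustration only

fw_bin, rn_bin = binned_sum(NATIVE_FW, READ_NOISE)
print(fw_bin)  # 204000
print(round(dynamic_range_stops(NATIVE_FW, READ_NOISE), 2))
print(round(dynamic_range_stops(fw_bin, rn_bin), 2))
```

Under those assumptions the binned mode gains exactly one stop (4× the capacity, 2× the read noise). If a camera combined the charge before readout, read noise would not grow and the gain would be two stops, so it is worth checking what your camera actually does.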

On paper that sounds nice, but in practice it means being able to shoot 10- to 15-minute subs without clipping the bright stars. Imagine the insane dynamic range: you can finally capture the core of M31 AND its faintest arms in the same sub, without having to mess with HDR or make compromises. And at those sub lengths, read noise becomes negligible compared with the sky background noise.
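Whether a sub clips is simple arithmetic: time to saturation is full well divided by the electron rate in the brightest pixel (ignoring sky background here). The flux numbers below are hypothetical, not measurements. They illustrate one caveat worth checking on your own data. If the mono mode sums a 2×2 block, each binned pixel also collects the light of four native pixels, so the gain in clip time lands between 1× (light spread evenly over the block) and 4× (light concentrated in one native pixel). The second function estimates how much of each sub's noise variance comes from read noise once the sky background dominates.

```python
def seconds_to_saturate(full_well_e, flux_e_per_s):
    """Exposure time (s) before a pixel collecting flux_e_per_s electrons/s fills its well."""
    return full_well_e / flux_e_per_s


def read_noise_share(sky_e_per_s, read_noise_e, exposure_s):
    """Fraction of a sub's per-pixel noise variance (sky + read) that comes from read noise."""
    sky_var = sky_e_per_s * exposure_s  # Poisson noise: variance equals the mean count
    read_var = read_noise_e ** 2
    return read_var / (sky_var + read_var)


FW_NATIVE = 51_000   # e-, figure quoted in the post
FW_BINNED = 204_000  # e-, figure quoted in the post
PEAK_FLUX = 100.0    # e-/s in a star's brightest native pixel (hypothetical)

print(seconds_to_saturate(FW_NATIVE, PEAK_FLUX))      # 510.0 (native pixel)
# Binned pixel, star core falling almost entirely on one native pixel of the block:
print(seconds_to_saturate(FW_BINNED, PEAK_FLUX))      # 2040.0
# Binned pixel, light spread evenly over all four native pixels of the block:
print(seconds_to_saturate(FW_BINNED, 4 * PEAK_FLUX))  # 510.0

# 15-minute sub, hypothetical sky of 1 e-/s per binned pixel and 2 e- binned read noise
print(read_noise_share(sky_e_per_s=1.0, read_noise_e=2.0, exposure_s=900))
```

With these made-up numbers, read noise contributes well under 1% of the variance in a 900 s sub, which is what "negligible" means in practice.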

But where it gets really brilliant is with this hybrid workflow that lets you get the best of both worlds with a single camera:

- First, you capture your Color (RGB) layers in standard color mode at the sensor's native resolution. Simple and effective.

- Then, for the Luminance (L), you switch to monochrome mode. This mode activates the 4x full well, but it often does so by binning (e.g., 2x2), which gives you an ultra-deep L frame at reduced resolution.
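For reference, this is what 2×2 summing does to a raw frame. It is a sketch that assumes the mono mode simply adds each 2×2 Bayer cell (one R, two G, one B photosite) into one output pixel. Real cameras may average instead, or bin before digitisation, so check your driver's documentation. The output has half the width and half the height, which is the resolution loss described above.

```python
import numpy as np


def bin2x2_sum(raw):
    """Sum each non-overlapping 2x2 block of a raw frame into one output pixel.

    On a Bayer sensor each 2x2 block holds one R, two G and one B photosite,
    so the result is a single monochrome (luminance-like) value per block.
    A trailing odd row or column is dropped.
    """
    h, w = raw.shape
    h2, w2 = h // 2, w // 2
    # View the frame as (block_row, row_in_block, block_col, col_in_block).
    blocks = raw[: h2 * 2, : w2 * 2].reshape(h2, 2, w2, 2)
    return blocks.sum(axis=(1, 3))


raw = np.arange(16, dtype=np.int64).reshape(4, 4)
print(bin2x2_sum(raw).tolist())  # [[10, 18], [42, 50]]
```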

And here's the trick: when you stack your Luminance subs, you apply a Drizzle (usually 2x). This process reconstructs an L image at the sensor's native pixel scale and recovers much of the resolution lost to binning, provided your subs are dithered. In the end, you can combine this high-resolution "drizzled" Luminance with the color layer you captured earlier. You get a final image that merges the incredible dynamic range of the mono mode with the simple acquisition of color. It's the perfect solution for galaxies, for example.
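To make the drizzle and combination steps concrete, here is a stripped-down sketch. The drizzle uses a point kernel (pixfrac → 0), which is essentially shift-and-add onto a finer grid, rather than the full area-overlap drizzle found in stacking software. It assumes pure translations between subs, already known from registration. The second function is one simple way to do the final combination: it rescales linear RGB so its Rec. 709 luminance equals the drizzled L. It is not the algorithm any particular program uses, and it assumes L and RGB are registered on the same pixel grid.

```python
import numpy as np


def point_drizzle(frames, offsets, scale=2):
    """Drizzle with a point kernel (pixfrac -> 0).

    Each input pixel's value is dropped onto the output pixel (on a grid `scale`
    times finer) that contains its shifted centre.
    frames  : list of 2-D arrays of identical shape (e.g. binned L subs)
    offsets : list of (dy, dx) translations, in input pixels, mapping each frame
              onto the reference frame (assumed known from registration)
    Returns the drizzled image and a weight map (hits per output pixel).
    """
    ny, nx = frames[0].shape
    out = np.zeros((ny * scale, nx * scale))
    wht = np.zeros_like(out)
    yy, xx = np.mgrid[0:ny, 0:nx]
    for frame, (dy, dx) in zip(frames, offsets):
        # Pixel centres sit at +0.5; shift into the reference frame, then scale up.
        oy = np.floor((yy + 0.5 + dy) * scale).astype(int)
        ox = np.floor((xx + 0.5 + dx) * scale).astype(int)
        ok = (oy >= 0) & (oy < ny * scale) & (ox >= 0) & (ox < nx * scale)
        np.add.at(out, (oy[ok], ox[ok]), frame[ok])
        np.add.at(wht, (oy[ok], ox[ok]), 1.0)
    # Average where covered; leave uncovered output pixels at zero.
    img = np.divide(out, wht, out=np.zeros_like(out), where=wht > 0)
    return img, wht


def lrgb_combine(lum, rgb, eps=1e-12):
    """Simple ratio LRGB: rescale linear RGB (H, W, 3) so its luminance matches `lum`."""
    weights = np.array([0.2126, 0.7152, 0.0722])  # Rec. 709 luminance weights
    y = rgb @ weights
    return rgb * (lum / np.maximum(y, eps))[..., None]


# Four binned L subs dithered by half a binned pixel (idealised, hypothetical offsets).
subs = [np.full((4, 5), 3.0) for _ in range(4)]
offsets = [(0.0, 0.0), (-0.5, 0.0), (0.0, -0.5), (-0.5, -0.5)]
lum, wht = point_drizzle(subs, offsets)
print(lum.shape, wht.min())  # (8, 10) 1.0

rgb = np.random.default_rng(0).uniform(0.1, 1.0, size=lum.shape + (3,))
final = lrgb_combine(lum, rgb)
```

The half-pixel offsets here are idealised. With real random dithers you need enough subs that every fine-grid pixel gets hit, and the weight map is what lets you check that.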

Honestly, just for the ability to shoot 15-minute subs without clipping and to finally push the limits of your mount and your sky, I think it's worth considering.

What are your thoughts on this? Have any of you already tried this approach? I'm curious to hear your feedback.